It's been a while since I blogged... so, be warned I've just gotten more opinionated.

Threat Intelligence - one of the more recent buzzwords in InfoSec (Cyber Security (yes, I know what word I used)).

What is TI? Who does it? How does one do it properly? What are the inputs? What are the outputs (products)? Can non-IC people learn the tradecraft and do it even remotely properly? -- Lots of questions regarding TI, and it seems that I am not alone in trying to figure it out.

What I know it's not: indicator feeds! We need to get out of the mindset that TI is just streams of indicators with no context! If you're given an IP address, alone with no context, how much can you figure out from just an IP address? Yes, with tools you can find out different things like Registrar, ASN, pDNS, etc. But do you know if you should be looking for traffic inbound to it or outbound? Do you know if one behavior is normal and the other is abnormal? Answer = most likely not.

How do we move the perception of TI away from it being just context-less indicator sharing and wrangling?

I've seen a few different points of security talked about in a few different forms, from Defense in Depth, NSM, the Pyramid of Pain, and so on. One of the keys to executing on these models of security is knowing your environment. When I say knowing it, I mean understanding business functions, critical functions (business and IT), applications/systems/platforms/etc. (the glue that makes the business work), and mitigating controls intimately. Or at least with enough assurance that you can understand what is going on, and where you need to get better visibility/instrumentation.

When working on Security and Threat Intelligence I realized that a fair amount of orgs/Cos need to work on basic Data Intelligence before they start working up to Threat Intelligence. I went to a pyramid, which I'll need to draw up.

Steps (numbered from the pyramid's foundation up to its top):

1. Data Intelligence - log/app/event collection, configure to catch what you need

2. Operational/Business Intelligence - using log/app/event to improve Ops/Biz functions (keep the lights on)

3. Security Intelligence - Feed the SIEM for SOC/CIRT, build baselines, understand environment

4. Threat Intelligence - Advance SI to link internal/external threats to the intelligence stack

Is this possible? Yes. Will it be in constant flux? Of course; we have humans running the damn thing, making decisions, and changes.

Is this Threat Intelligence? Nope, but it is what I have in mind on building up to it.

@demon117

Demon117 Security

Monday, November 23, 2015

Thursday, July 24, 2014

URL Analysis - a [semi] data driven approach

--------------------------------------

Been a while since I sat down and posted something here... Still slaving away at the grindstone, but having fun and doing something I enjoy (shhh... don't let my bosses know though).

I don't think I've finished any of the projects I've been working on either, basically have the same list with more added... though the rate they've been adding has slowed down a little, or increased. Who knows.

--------------------------------------

URL analysis is something I've been working on ever since I first started trying to build pattern matches for malicious websites back in 2010-2011. This can be both easy and tricky.

Easy: Well, easier if you know Regular Expressions (RegEx/RE/PCRE) and know how to finesse the pattern for the tool you are using. There can be a bit of variance between products: Splunk understands RegEx a little differently than Suricata or Snort, and so on. So, figure out what you have to write to. Additionally, take advantage of other people's RegEx, and note what they are writing for and to. I highly recommend looking at the rules the awesome people of the Emerging Threats community have put out; that's where I've learned the most about pattern matching and refinement.

I recommend pulling down their latest rule set here: rules.emergingthreats.net. I like pulling down the allrules file and then using grep to find the rules I am looking for.

An example of extracting the PCREs for (my favorite exploit kit) Fiesta kit:

grep -i 'fiesta' emerging-all.rules | egrep -o 'pcre:".*"'

This will spit out just the PCRE bits from the file after finding all the matches for Fiesta. It can probably be improved, but it works.
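For anyone who would rather do the same thing in Python, here is a rough sketch of the idea. It is not how the grep one-liner works internally, just an equivalent under my own assumptions: the sample rule lines are made up for illustration, and the pattern stops at the first unescaped double quote, so it does not run on into later options on the same line.

```python
import re

# Capture the body of each pcre:"..." option. Backslash-escaped characters
# (including \") are consumed as pairs, so the match ends at the first
# unescaped double quote instead of the last quote on the line.
PCRE_OPTION = re.compile(r'pcre:\s*"((?:\\.|[^"\\])*)"')


def extract_pcres(rule_lines, keyword):
    """Return the pcre patterns from every rule line that mentions keyword."""
    needle = keyword.lower()
    found = []
    for line in rule_lines:
        if needle in line.lower():
            found.extend(PCRE_OPTION.findall(line))
    return found


# Hypothetical rule lines, only here to exercise the function.
SAMPLE_RULES = [
    r'alert tcp $HOME_NET any -> $EXTERNAL_NET $HTTP_PORTS (msg:"EXAMPLE Fiesta URI"; '
    r'content:"/?"; http_uri; pcre:"/\/\?\d[0-9a-f]{50,68}$/U"; '
    r'content:"Java/1."; http_header; sid:1;)',
    r'alert tcp $HOME_NET any -> $EXTERNAL_NET $HTTP_PORTS (msg:"EXAMPLE other kit"; '
    r'pcre:"/\/other$/U"; sid:2;)',
]

for pattern in extract_pcres(SAMPLE_RULES, "fiesta"):
    print(pattern)
```

To run it against the real file, pass an open file handle for emerging-all.rules as rule_lines instead of the sample list.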

Tricky: This is where approach and having a good-sized data set to play with are extremely important. Building RE matches against malicious URLs is tricky, depending on the deviousness of the miscreants, because they like to make their deviance hard to match on. Either the URLs are terribly dynamic, constantly changing lengths and other details so that a solid match, or even an anchor, is hard to find, or they blend in with common web traffic, so extracting the badness from the good is time consuming.

I've written before about my (and friends') work on tracking and writing signatures to match neosploit aka Fiesta Kit, and hopefully there has been a marked growth or maturation in sample data and matching since then.

A quick aside on tools: there are certain things that must be taken into account when building Snort/Suricata signatures that you can let slide in something like Splunk. Take anchoring on a string of text. One of the frustrations with Fiesta Kit was not really having anything to anchor off of, which was a pain and made for rough and intense rules for the IDS engine. Splunk, however, will generally accept poorly written searches, and hopefully it will kill a poorly written search and RE combination if it can't muscle through it. Ugh, I've written a few doozies hunting Fiesta in my time. And, though I thought I was following 'start small, get bigger with testing', I was definitely not doing as well as I thought. Yay for not melting servers.

Yes, I am rambling...

Recently, I've had the pleasure of seeing some ninjas in action when it comes to hunting miscreants and doing good things on the Internet. One of the challenges I have enjoyed is trying to attack parts of the chain of events when it comes to exploit kits. It is always good to have most of the kits stopped before the user is exposed, but there is a good amount of data to be gathered even when the incident has been thwarted.

(Warning, still very much a python noob... so the following idea isn't magic of any sort)

Something that I have been working on is a python script that I can feed parts of or entire URLs to find the lengths of. Why? When sifting through dynamically generated crap that is attempting to hide amongst annoying but also dynamically generated (hopefully) innocent traffic I end up dealing with wide ranges in traffic types. Being able to narrow down my match to be specific enough to have at least low false positive rates gives me something to go on, and not be too embarrassed to share. (Who am I kidding, I'm still a noob, and if I can get something that matches and I am hitting a brick wall on refining I will share it to let the Pros and ninjas go to work.)

I need to work on the script some more to be able to tailor it to the data I have, and then be able to spit out data that is usable both for my own purposes and for sharing. Right now I can take one field, calculate the length, and then, using the tools the Unix Gods bestowed on us (sort/uniq/cat/sed/awk/less), find the ranges based on the malicious traffic. Simple, but important. Being able to tell an EK gate from analytics site traffic is the difference between driving your SOC mad with false positives and having solid intel on which legit sites have been popped, or which ad rotators are carrying bad content, and are now sending users to bad places.

Learn how to first build solid network connections with good people who share good info, pivot off of it in your own environment, gather as much as you can, see if you can improve (expand and tighten) the patterns, and most importantly share back.
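This is not my actual script, just a minimal sketch of the length idea so it is easier to picture. The host kit.example and the function names are made up; the query strings are the ones from the NeoSploit/Fiesta proxy logs in an older post on this blog. It measures one URL component per URL and reports the range, which is the part I was doing with sort and uniq.

```python
from collections import Counter
from urllib.parse import urlsplit


def component_length(url, component="query"):
    """Length of one URL component ('netloc', 'path', 'query') or of the whole 'url'."""
    if component == "url":
        return len(url)
    return len(getattr(urlsplit(url), component))


def length_profile(urls, component="query"):
    """Count how often each length occurs and report the observed range."""
    counts = Counter(component_length(u, component) for u in urls)
    return {"min": min(counts), "max": max(counts), "counts": dict(sorted(counts.items()))}


# Hypothetical host; the query strings come from the older proxy log sample.
BASE = "http://kit.example/gf3ztv8/?"
EXPLOIT_A = "659fdb537a47b1d90651585d56590d0406010f5d5000050803050d0350530105"
EXPLOIT_B = "4e466b7c405c14f15606420d04590f540451020d020007580155005302530355"
LOAD_ID = "2826a54d96e538d05740570d530e0c53020c040d5557045f0708065355040052"

SAMPLE_URLS = [
    BASE + "2",
    BASE + EXPLOIT_A,
    BASE + EXPLOIT_B,
    BASE + LOAD_ID + ";1;1",
    BASE + LOAD_ID + ";1;1;1",
]

print(length_profile(SAMPLE_URLS, "query"))
print(length_profile(SAMPLE_URLS, "path"))
```

Feed it a list of known-bad URLs and a list of known-good ones, compare the two profiles, and you have a starting point for the quantifier in a regex. Note that defanged hosts such as evil[.]org need to be refanged first, since the brackets can trip up urlsplit.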

It is one of the best feelings and experiences to be able to share back to the community to help others protect others from the evils of malicious miscreants.

Find things, build matches, share and be awesome!

Happy hunting!

-Demon117

@demon117

Sunday, November 24, 2013

thinking and analysis

First off, going to pull what may be a stupid wannabe blogger move and mix in some speculation with a lot of opinion... hopefully it works, if not... let me know.

Analysis... it's interesting, necessary for life and survival... but as natural as it may be on a day to day basis, security analysis is a little different for most of us. Fact of the matter is, there are many facets of life that require thinking or analysis that is more difficult than just regular everyday second-nature thinking.

Last week I heard about Security Intelligence and Operations in a presentation, well, multiple presentations. Organizations around the world are pitched in battle and face challenges that range from hiring analysts to training them to fighting the battles they are hired for.

There are many questions that management is asking about analysts, and from the sounds of it they are centered primarily around hiring... One of the constants of Ops is the ever rotating door...

The question I am asking myself is how do we build solid analysts that can link security concepts with tools and open their minds to do awesome analysis, or just quality analysis versus assumption.

Okay, time to stop my mental meandering and get to the point.

I am going to sit down and read two books, well, finish one and consume the other.

'Thinking, Fast and Slow' by Daniel Kahneman

And

'Psychology of Intelligence Analysis' by Richards J. Heuer Jr. [pdf here]

Both of these books have to do with thinking, and I am under the impression that both have something to do with helping us understand when we are acting off of assumptions and when we are doing analysis.

I am going to see if I can find and then illustrate a link between the two writings. My premise for this is based on Thinking, Fast and Slow and the two systems we as humans operate with, then linking that to Heuer's writing on what I believe is doing analysis and being able to separate the assumption from analysis.

This will take a bit as I have to finish both books.

- Paul

Saturday, November 9, 2013

Data Wrangling – Splunk & CIM

Data… there is lots of it… Now we can store it (well, we’ve been able to for a while), but it’s catching on that lots of data is good, and making it useful is awesome!

I get to play with a little data. It’s minuscule in comparison with some, but it’s what I get to play with… so I am learning about the things I can do. One of the tools I get to use is Splunk, and I’ve had the opportunity to shape and mold the “data” so that it’s more than just unstructured data. It’s usable data with some tuning, but as far as I can tell, you really do have to put time and effort into Splunk to “train it”. Let me be clear, I’ve yet to see Splunk do something that looks intelligent other than Key:Value pair extraction out of data… and whether that’s useful is debatable.

I have a vision, and right now I am trying to understand if it’s a commonly shared vision using what the Splunk people call the Common Information Model (link here). My vision, helped along by a friend and mentor, as well as by seeing what people have done in more advanced correlation systems, is to build essentially a web of linking points of data within all of the various events and log types I have access to.

Think of this from a security analyst standpoint:

Event comes in, they see it in the SIEM or the ES App and start to dig in… Asking the question of what else does this show up in or as? Building a search based off of src_ip="IP in question" OR dest_ip="IP in question"… (Two points on this: still pre-Splunk6, and let’s say we cleverly specify the index via config magic for the role in the app)… What do you think will happen?

What I am pushing for is to make it so all sources, sourcetypes, and sub-sourcetypes that have a component that is a “source ip address” or a “destination ip address” are checked for this IP. If one has hits, it shows up in the search. Yes, this is not a super rare term search; that’s the point of the search, it’s not supposed to be. It does, however, give the analyst the ability to dig in to all of the sourcetypes that have hits, allowing further drilling down in various searches to extract and pivot through the data across various periods of time to see where this IP has interacted with the network.

Like:

User VPNs into the company -> AV events occur -> Logs into a meeting -> Logs into an application server -> etc. etc.

Being able to correlate a single user’s path through various log sources is key to seeing everything that user has done in the period of time visible to the security analyst. It makes it easier for them to pick up a bread crumb, whether it be in the middle of the trail or at any other point, and find out the who|what|why|when needed to decide if it’s an incident or not.
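To make the idea concrete outside of Splunk, here is a toy sketch in Python. Everything in it is an assumption for illustration: the sourcetype names, the vendor field names, and the alias table are invented, and this is not how Splunk or the CIM are implemented. It only shows why mapping every log type onto the same src_ip and dest_ip names lets one search pivot across all of them.

```python
from collections import defaultdict

# Hypothetical alias table: per sourcetype, map vendor field names onto the
# shared names every search can rely on.
FIELD_ALIASES = {
    "vpn": {"client_addr": "src_ip", "gateway_addr": "dest_ip"},
    "proxy": {"c_ip": "src_ip", "s_ip": "dest_ip"},
    "av": {"host_ip": "src_ip"},
}


def normalize(event):
    """Return a copy of the event with vendor field names replaced by shared ones."""
    aliases = FIELD_ALIASES.get(event["sourcetype"], {})
    return {aliases.get(key, key): value for key, value in event.items()}


def pivot_on_ip(events, ip):
    """Group every event that has the IP as source or destination by sourcetype."""
    hits = defaultdict(list)
    for event in map(normalize, events):
        if ip in (event.get("src_ip"), event.get("dest_ip")):
            hits[event["sourcetype"]].append(event)
    return dict(hits)


# Made-up events using documentation address ranges.
EVENTS = [
    {"sourcetype": "vpn", "client_addr": "198.51.100.7", "gateway_addr": "192.0.2.1", "user": "alice"},
    {"sourcetype": "av", "host_ip": "198.51.100.7", "signature": "example detection"},
    {"sourcetype": "proxy", "c_ip": "192.0.2.50", "s_ip": "198.51.100.7"},
    {"sourcetype": "proxy", "c_ip": "192.0.2.51", "s_ip": "203.0.113.9"},
]

for sourcetype, matched in pivot_on_ip(EVENTS, "198.51.100.7").items():
    print(sourcetype, len(matched))
```

The analyst asks one question (where has this IP shown up?) and gets back every sourcetype with hits, which is the starting point for drilling down.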

I have no idea how many people/organizations are leveraging the power of CIM (Common information model), or if I am just being slow to get on board with this.

My experience with CIM is having it pointed out by a friend/mentor, and then trying to hold the people working on Splunk to it.

Thursday, March 21, 2013

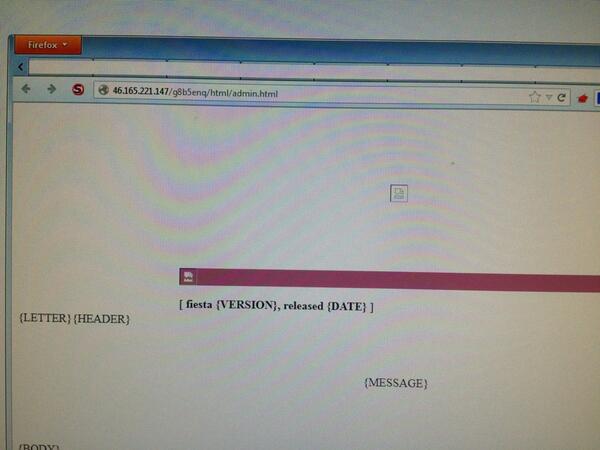

Fiesta - Not NeoSploit I was wrong

I was wrong... what I thought was NeoSploit, but never saw an admin panel for, is not NeoSploit; it is Fiesta.

As posted on twitter here is an image of fiesta...

To access the panel go to /sub-directory/html/admin.html

Lame post I know... but I will be trying to pick up on research and posting as I can.

I think URLQuery is awesome! And will be trying to find ways to dig through that data set to ferret out more fiesta.

-Demon

Tuesday, September 11, 2012

NeoSploit serving two exploits

While tracking NeoSploit it has been interesting to see behavioral changes in the kit, from varying the landing page to changing the sequence of how the kit delivers victims to the exploit.

NeoSploit, unlike the Blackhole kit, will serve up the exploit multiple times to the victim before the compromise occurs. Why does this happen? I have no idea, but in the future will hopefully find out.

Generally I see NeoSploit serve up a single exploit 3-5 times. This behavior has changed recently with the addition of the new Java 0-day (referenced here by Daryl at Kahu Security). Now it looks like the kit is serving up two exploits, each served up multiple times to the victim.

2012-09-10T19:36:13 200 text/html 513:7947 GET hxxp://minigamesobihais[.]org/gf3ztv8/?2

2012-09-10T19:36:18 200 application/octet-stream 373:3757 GET hxxp://minigamesobihais[.]org/gf3ztv8/?659fdb537a47b1d90651585d56590d0406010f5d5000050803050d0350530105

2012-09-10T19:36:18 200 application/octet-stream 373:6623 GET hxxp://minigamesobihais[.]org/gf3ztv8/?4e466b7c405c14f15606420d04590f540451020d020007580155005302530355

2012-09-10T19:36:19 200 application/octet-stream 330:3757 GET hxxp://minigamesobihais[.]org/gf3ztv8/?659fdb537a47b1d90651585d56590d0406010f5d5000050803050d0350530105

2012-09-10T19:36:19 200 application/octet-stream 330:6623 GET hxxp://minigamesobihais[.]org/gf3ztv8/?4e466b7c405c14f15606420d04590f540451020d020007580155005302530355

2012-09-10T19:36:33 200 application/octet-stream 373:3757 GET hxxp://minigamesobihais[.]org/gf3ztv8/?659fdb537a47b1d90651585d56590d0406010f5d5000050803050d0350530105

2012-09-10T19:36:33 200 application/octet-stream 373:6623 GET hxxp://minigamesobihais[.]org/gf3ztv8/?4e466b7c405c14f15606420d04590f540451020d020007580155005302530355

2012-09-10T19:36:34 200 application/octet-stream 373:3757 GET hxxp://minigamesobihais[.]org/gf3ztv8/?659fdb537a47b1d90651585d56590d0406010f5d5000050803050d0350530105

2012-09-10T19:36:34 200 application/octet-stream 373:6623 GET hxxp://minigamesobihais[.]org/gf3ztv8/?4e466b7c405c14f15606420d04590f540451020d020007580155005302530355

2012-09-10T19:36:35 200 application/octet-stream 373:6623 GET hxxp://minigamesobihais[.]org/gf3ztv8/?4e466b7c405c14f15606420d04590f540451020d020007580155005302530355

2012-09-10T19:36:36 200 application/octet-stream 298:161052 GET hxxp://minigamesobihais[.]org/gf3ztv8/?2826a54d96e538d05740570d530e0c53020c040d5557045f0708065355040052;1;1

2012-09-10T19:36:37 200 text/html 300:226 GET hxxp://minigamesobihais[.]org/gf3ztv8/?2826a54d96e538d05740570d530e0c53020c040d5557045f0708065355040052;1;1;1
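A quick way to tally the repeats is to count the application/octet-stream responses per query string. The sketch below does that in Python over the log lines above. The six-column split is my reading of this particular proxy log layout, and the function name is made up.

```python
from collections import Counter

PROXY_LOG = """\
2012-09-10T19:36:13 200 text/html 513:7947 GET hxxp://minigamesobihais[.]org/gf3ztv8/?2
2012-09-10T19:36:18 200 application/octet-stream 373:3757 GET hxxp://minigamesobihais[.]org/gf3ztv8/?659fdb537a47b1d90651585d56590d0406010f5d5000050803050d0350530105
2012-09-10T19:36:18 200 application/octet-stream 373:6623 GET hxxp://minigamesobihais[.]org/gf3ztv8/?4e466b7c405c14f15606420d04590f540451020d020007580155005302530355
2012-09-10T19:36:19 200 application/octet-stream 330:3757 GET hxxp://minigamesobihais[.]org/gf3ztv8/?659fdb537a47b1d90651585d56590d0406010f5d5000050803050d0350530105
2012-09-10T19:36:19 200 application/octet-stream 330:6623 GET hxxp://minigamesobihais[.]org/gf3ztv8/?4e466b7c405c14f15606420d04590f540451020d020007580155005302530355
2012-09-10T19:36:33 200 application/octet-stream 373:3757 GET hxxp://minigamesobihais[.]org/gf3ztv8/?659fdb537a47b1d90651585d56590d0406010f5d5000050803050d0350530105
2012-09-10T19:36:33 200 application/octet-stream 373:6623 GET hxxp://minigamesobihais[.]org/gf3ztv8/?4e466b7c405c14f15606420d04590f540451020d020007580155005302530355
2012-09-10T19:36:34 200 application/octet-stream 373:3757 GET hxxp://minigamesobihais[.]org/gf3ztv8/?659fdb537a47b1d90651585d56590d0406010f5d5000050803050d0350530105
2012-09-10T19:36:34 200 application/octet-stream 373:6623 GET hxxp://minigamesobihais[.]org/gf3ztv8/?4e466b7c405c14f15606420d04590f540451020d020007580155005302530355
2012-09-10T19:36:35 200 application/octet-stream 373:6623 GET hxxp://minigamesobihais[.]org/gf3ztv8/?4e466b7c405c14f15606420d04590f540451020d020007580155005302530355
2012-09-10T19:36:36 200 application/octet-stream 298:161052 GET hxxp://minigamesobihais[.]org/gf3ztv8/?2826a54d96e538d05740570d530e0c53020c040d5557045f0708065355040052;1;1
2012-09-10T19:36:37 200 text/html 300:226 GET hxxp://minigamesobihais[.]org/gf3ztv8/?2826a54d96e538d05740570d530e0c53020c040d5557045f0708065355040052;1;1;1
"""


def count_binary_fetches(log_text):
    """Count application/octet-stream responses per query string."""
    counts = Counter()
    for line in log_text.splitlines():
        fields = line.split()
        if len(fields) != 6:
            continue  # skip blank or malformed lines
        _timestamp, _status, mime, _sizes, _method, url = fields
        if mime == "application/octet-stream":
            # Everything after the first "?" identifies the object requested.
            counts[url.partition("?")[2]] += 1
    return counts


for query, hits in count_binary_fetches(PROXY_LOG).most_common():
    print(hits, query[:16])
```

The two exploit identifiers stand out immediately because each is fetched several times, while the load request shows up once.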

I was able to grab the exploits from this interaction and run them through VirusTotal.

First up:

659fdb537a47b1d90651585d56590d0406010f5d5000050803050d0350530105

1/42 detections

AhnLab-V3 with Java/Cve-2012-1723

Older but extremely effective Java exploit.

Second up:

4e466b7c405c14f15606420d04590f540451020d020007580155005302530355

5faee8c1d7a9b0e5e6ea52720a958794

3/41 detections

Kaspersky

HEUR:Exploit.Java.CVE-2012-4681.gen

And here we have the Java 0-day.

As for the payload, we saw this Medfos served up at the end.

2/41 detections

Fortinet

W32/Medfos.ALI!tr

Traffic generated by Medfos looked like this: http://IP/file/id=BwBwAAEAOuxqCQEFABcAAABWAAAAAAAAAAAAAACMDA... (truncated due to bad formatting)

Always interesting to see what shows up after a successful encounter.

Reading Daryl's writeup again, he goes into the deobfuscation and tears through the kit, which is awesome. If you're not following him on twitter (@kahusecurity) or reading his blog and you're in security, you need to do it NOW! (Seriously now!)

It would be interesting to see if there is a way, like Emerging Threats has done with the Blackhole kit, to build a sig off of the landing page number.

Granted, the way we've been attacking it is by building rules off of the exploit delivery URI, load URI, and post-load URI patterns.

Exploit sig:

alert tcp $HOME_NET any -> $EXTERNAL_NET $HTTP_PORTS (msg:"CURRENT_EVENTS Neosploit Exploit URI Request (by bare query parameter pattern)"; flow:established,to_server; content:"/?"; http_uri; pcre:"/\/\?\d[0-9a-f]{50,68}$/U"; classtype:attempted-user; reference:url,www.google.com; sid:*******; rev:2; )

Load URI sig:

alert tcp $HOME_NET any -> $EXTERNAL_NET $HTTP_PORTS (msg:"CURRENT_EVENTS Neosploit Load URI Request (by bare query parameter pattern)"; flow:established,to_server; content:"/?"; http_uri; content:"|3b|"; distance:0; http_uri; content:"|3b|"; distance:0; http_uri; pcre:"/\/\?\d[0-9a-f]{50,68}\;\d+\;\d+$/U"; classtype:attempted-user; reference:url,www.google.com; sid:*****; rev:2; )

Post-load URI sig:

alert tcp $HOME_NET any -> $EXTERNAL_NET $HTTP_PORTS (msg:"CURRENT_EVENTS Neosploit Post-Load URI Request (by bare query parameter pattern)"; flow:established,to_server; content:"/?"; http_uri; content:"|3b|"; distance:0; http_uri; content:"|3b|"; distance:0; http_uri; content:"|3b|"; distance:0; http_uri; pcre:"/\/\?\d[0-9a-f]{50,68}\;\d+\;\d+\;\d+$/U"; classtype:attempted-user; reference:url,www.google.com; sid:******; rev:2; )

Again, these are experimental rules.
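Before loading rules like these into an IDS, it helps to check the regex portion against known samples. Here is a small Python sketch that applies the three PCRE patterns to URIs from the proxy log above. It only covers the regex part of each rule: the content matches, flow options, and the /U buffer handling are not modeled, and the classify helper is a name I made up.

```python
import re

# Shared prefix of all three rules: "/?" then a digit and 50-68 hex characters.
HEX_ID = r"/\?\d[0-9a-f]{50,68}"

STAGES = [
    ("exploit", re.compile(HEX_ID + r"$")),
    ("load", re.compile(HEX_ID + r";\d+;\d+$")),
    ("post-load", re.compile(HEX_ID + r";\d+;\d+;\d+$")),
]


def classify(uri):
    """Return the stage whose pattern matches the URI, or None if none does."""
    for name, pattern in STAGES:
        if pattern.search(uri):
            return name
    return None


SAMPLES = [
    "/gf3ztv8/?2",
    "/gf3ztv8/?659fdb537a47b1d90651585d56590d0406010f5d5000050803050d0350530105",
    "/gf3ztv8/?4e466b7c405c14f15606420d04590f540451020d020007580155005302530355",
    "/gf3ztv8/?2826a54d96e538d05740570d530e0c53020c040d5557045f0708065355040052;1;1",
    "/gf3ztv8/?2826a54d96e538d05740570d530e0c53020c040d5557045f0708065355040052;1;1;1",
]

for uri in SAMPLES:
    print(classify(uri), uri[:28])
```

The landing page request (/?2) falls through all three patterns, which is expected since none of the rules target it.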

More on NeoSploit as I can find it.

-Paul

@demon117

Labels: 0-day, attack, criminal, cybercrime, exploit, Exploit Kit, Exploit Pack, Java, malware, medfos, NeoSploit, research, security, threat, threatintel, webbased

Sunday, August 12, 2012

The "2 hit" kit

I dub this unknown kit/pack as the "2hit kit" and here is why.

The kit is extremely "simple" looking: there are two interactions with the malicious domain, which serve up an exploit and then a payload.

Example:

evildomain[.]info/0516 (exploit)

evildomain[.]info/07893 (payload)

Could it be that simple?

No; however, I haven't been able to find and document the missing link in the malicious traffic. My assumption is that a compromised site (possibly outdated/exploited WordPress or something along those lines) is serving up malicious JavaScript that leads to the victim system's JRE connecting to the malicious domain.

Unfortunately this has been evading me so these are my unconfirmed assumptions.

I will be editing this or adding another post with a theoretical Snort signature for this kit.

My RegEx logic: (Splunk with proxy logs)

uri_path="/0*" user_agent=*java* | regex uri_path="^/0\d{3,4}$"

Currently the logic for the signature will be based around catching the Java/1. user agent string in the header, moving into the regex for the number. There is much work to be done on it.
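The same logic can be sanity-checked outside of Splunk. This is a rough Python equivalent of the search above, under the assumption that the user agent match is a case-insensitive substring test. The function name and the full user agent strings are made up for the example; the two paths are the ones shown earlier in this post.

```python
import re

# Same pattern as the Splunk regex: a slash, a zero, then 3 or 4 more digits.
TWO_HIT_PATH = re.compile(r"^/0\d{3,4}$")


def looks_like_2hit(uri_path, user_agent):
    """True when a Java user agent requests a path shaped like /0NNN or /0NNNN."""
    return "java" in user_agent.lower() and bool(TWO_HIT_PATH.match(uri_path))


# Hypothetical user agent strings paired with the example paths.
CHECKS = [
    ("/0516", "Java/1.6.0_33"),
    ("/07893", "Java/1.7.0_05"),
    ("/0516", "Mozilla/5.0"),
    ("/012345", "Java/1.6.0_33"),
    ("/1234", "Java/1.6.0_33"),
]

for path, agent in CHECKS:
    print(looks_like_2hit(path, agent), path, agent)
```

Only the first two pairs should come back as hits: the third has no Java user agent, the fourth has too many digits, and the fifth does not start with a zero.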

There is much data to sift through and sites to plug at when I have the chance.

Until I have more.

-Demon

@demon117

Labels: 2hit, attack, criminal, cybercrime, Exploit Kit, Exploit Pack, infosec, Java, malware, research, security, threat, threatintel, webbased
